Smaller, Cheaper, Faster and Fine-Tuned

Easily fine-tune and serve any open-source LLM, including Llama 2, Mistral, and Zephyr, in your cloud, using your data, on fully managed, cost-efficient infra built by the team that did it at Uber, Apple, and Google.

Private

Privately deploy and query any open-source LLM in your VPC or Predibase cloud.

Cost Efficient

Dynamically serve many fine-tuned LLMs on a single GPU for 100x+ cost savings.

Efficient

No more training errors. Reliably fine-tune with built-in optimizations like LoRA and autosizing compute.

Interested in high-end GPUs for the largest LLMs?

Request Access to A100 / H100 GPUs

Endless Applications

Fine-tune and serve any open-source ML model or large language model, all within your environment, using your data, on top of proven, scalable infrastructure.

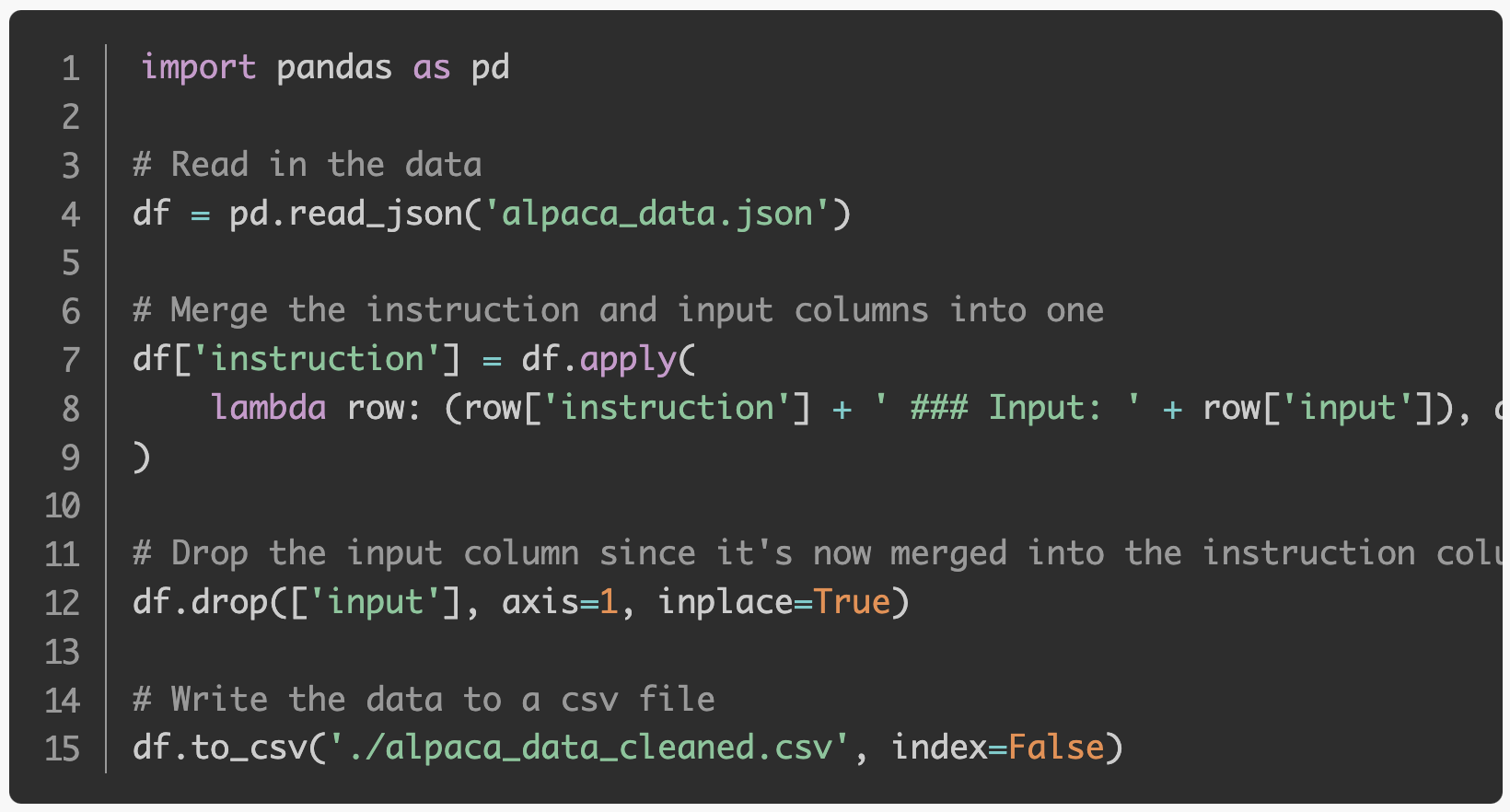

Fine-Tune Llama 2

Fine-tune Llama 2 on your data with scalable LLM infrastructure.